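With the project wrapping up, I have been going back to fill in the missing documentation, including the database design and the API docs. I used to write API docs by hand: the formatting had to be adjusted manually, every interface change meant editing the document again, and testing required separate code.

With Swagger, all of that work goes away. Adding a few annotations while writing the endpoints is enough.

Without further ado, here are the steps: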

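1. Add the Maven dependencies

2. Configure Swagger with a Spring Boot configuration class

3. Add Swagger annotations to the endpoints

4. Start the service, then view and test the API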

Step 1: Add the Maven dependencies

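    <dependency>
        <groupId>io.springfox</groupId>
        <artifactId>springfox-swagger-ui</artifactId>
        <version>2.6.1</version>
    </dependency>
    <dependency>
        <groupId>io.springfox</groupId>
        <artifactId>springfox-swagger2</artifactId>
        <version>2.6.1</version>
    </dependency>
    <dependency>
        <groupId>org.json</groupId>
        <artifactId>json</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.restdocs</groupId>
        <artifactId>spring-restdocs-mockmvc</artifactId>
        <scope>test</scope>
    </dependency>
    <dependency>
        <groupId>io.springfox</groupId>
        <artifactId>springfox-staticdocs</artifactId>
        <version>2.6.1</version>
    </dependency>
    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>druid</artifactId>
        <version>1.0.18</version>
    </dependency>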

Step 2: Configure Swagger with a Spring Boot configuration class

    package com.mymall.crawlerTaskCenter.config;

    import java.util.ArrayList;

    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.ComponentScan;
    import org.springframework.context.annotation.Configuration;
    import org.springframework.web.bind.annotation.RequestMethod;

    import springfox.documentation.builders.ApiInfoBuilder;
    import springfox.documentation.builders.PathSelectors;
    import springfox.documentation.builders.RequestHandlerSelectors;
    import springfox.documentation.builders.ResponseMessageBuilder;
    import springfox.documentation.schema.ModelRef;
    import springfox.documentation.service.ApiInfo;
    import springfox.documentation.service.ResponseMessage;
    import springfox.documentation.spi.DocumentationType;
    import springfox.documentation.spring.web.plugins.Docket;
    import springfox.documentation.swagger2.annotations.EnableSwagger2;

    @Configuration
    @ComponentScan
    @EnableSwagger2
    public class SwaggerConfig {

        @Bean
        public Docket petApi() {
            // Custom response messages shown for every documented endpoint
            ArrayList<ResponseMessage> responseMessages = new ArrayList<ResponseMessage>() {
                {
                    add(new ResponseMessageBuilder().code(200).message("Success").build());
                    add(new ResponseMessageBuilder().code(400).message("Invalid request parameters").responseModel(new ModelRef("Error")).build());
                    add(new ResponseMessageBuilder().code(401).message("Authentication failed").responseModel(new ModelRef("Error")).build());
                    add(new ResponseMessageBuilder().code(403).message("Requested resource is unavailable").responseModel(new ModelRef("Error")).build());
                    add(new ResponseMessageBuilder().code(404).message("Requested resource does not exist").responseModel(new ModelRef("Error")).build());
                    add(new ResponseMessageBuilder().code(409).message("Requested resource conflict").responseModel(new ModelRef("Error")).build());
                    add(new ResponseMessageBuilder().code(415).message("Unsupported request format").responseModel(new ModelRef("Error")).build());
                    add(new ResponseMessageBuilder().code(423).message("Requested resource is locked").responseModel(new ModelRef("Error")).build());
                    add(new ResponseMessageBuilder().code(500).message("Internal server error").responseModel(new ModelRef("Error")).build());
                    add(new ResponseMessageBuilder().code(501).message("Request method does not exist").responseModel(new ModelRef("Error")).build());
                    add(new ResponseMessageBuilder().code(503).message("Service temporarily unavailable").responseModel(new ModelRef("Error")).build());
                    add(new ResponseMessageBuilder().code(-1).message("Unknown error").responseModel(new ModelRef("Error")).build());
                }
            };

            return new Docket(DocumentationType.SWAGGER_2)
                    .apiInfo(apiInfo())
                    .select()
                    // Package that is scanned for API controllers
                    .apis(RequestHandlerSelectors.basePackage("com.mymall.crawlerTaskCenter.web.rest"))
                    .paths(PathSelectors.any())
                    .build()
                    // Replace the default response messages with the list above
                    .useDefaultResponseMessages(false)
                    .globalResponseMessage(RequestMethod.GET, responseMessages)
                    .globalResponseMessage(RequestMethod.POST, responseMessages)
                    .globalResponseMessage(RequestMethod.PUT, responseMessages)
                    .globalResponseMessage(RequestMethod.DELETE, responseMessages);
        }

        private ApiInfo apiInfo() {
            return new ApiInfoBuilder()
                    .title("MyMall Crawler Task Center RESTful APIs")
                    .description("author: 彭小康, 2017-08-28")
                    .version("2.0")
                    .build();
        }
    }

Step 3: Add Swagger annotations to the endpoints
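A side note before the controller code: each handler in it that logs the caller resolves the client IP the same way, reading the `X-Real-IP` header first and falling back to the remote address when the header is blank or `unknown`. If the repetition bothers you, the lookup could be factored out roughly as follows. This is only a sketch: `ClientIp` and `resolve` are hypothetical names that do not exist in the original project, and the helper takes plain strings so it can run without the servlet API.

```java
// Hypothetical helper, not part of the original project.
final class ClientIp {

    private ClientIp() {
    }

    /**
     * Mirrors the lookup used in the controller: prefer the proxy-supplied
     * header, fall back to the address of the direct connection.
     *
     * @param realIpHeader value of the X-Real-IP header, may be null
     * @param remoteAddr   value of HttpServletRequest.getRemoteAddr()
     */
    static String resolve(String realIpHeader, String remoteAddr) {
        // Same meaning as StringUtils.isBlank: null, empty, or whitespace only
        boolean blank = realIpHeader == null
                || realIpHeader.chars().allMatch(Character::isWhitespace);
        if (blank || "unknown".equalsIgnoreCase(realIpHeader)) {
            return remoteAddr;
        }
        return realIpHeader;
    }

    public static void main(String[] args) {
        System.out.println(resolve("203.0.113.7", "10.0.0.1"));
        System.out.println(resolve("unknown", "10.0.0.1"));
        System.out.println(resolve(null, "10.0.0.1"));
    }
}
```

Inside a handler the call would then look like `ClientIp.resolve(request.getHeader("X-Real-IP"), request.getRemoteAddr())`.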

    package com.mymall.crawlerTaskCenter.web.rest;

    import io.swagger.annotations.Api;
    import io.swagger.annotations.ApiOperation;
    import io.swagger.annotations.ApiParam;

    import java.util.List;

    import javax.servlet.http.HttpServletRequest;

    import org.apache.commons.lang.StringUtils;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.http.MediaType;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RequestMethod;
    import org.springframework.web.bind.annotation.RestController;

    import com.alibaba.fastjson.JSON;
    import com.mymall.crawlerTaskCenter.domain.CrawlerJob;
    import com.mymall.crawlerTaskCenter.log.LogsManager;
    import com.mymall.crawlerTaskCenter.service.CrawlerJobService;

    @RestController
    @RequestMapping(value = "/job")
    @Api(value = "Crawler job management", description = "Crawler job management API", tags = "CrawlerJobApi",
            consumes = MediaType.APPLICATION_JSON_VALUE, produces = MediaType.APPLICATION_JSON_VALUE)
    public class CrawlerJobController {

        private static org.apache.logging.log4j.Logger logger =
                LogsManager.getLocalLogger(CrawlerJobController.class.getName(), "job");

        @Autowired
        CrawlerJobService crawlerJobService;

        /**
         * Fetch the latest jobs.
         */
        @RequestMapping(value = "/getJobs", method = RequestMethod.GET)
        @ApiOperation(value = "getJobs: fetch the latest jobs", notes = "requires job number")
        public List getJobs(
                @ApiParam(required = false, name = "jobNum", value = "Number of jobs; defaults to 20 when omitted, and all jobs are returned if the task center holds fewer than 20") Integer jobNum,
                @ApiParam(required = false, name = "jobType", value = "Job type: platform (platform job), allNetList (network-wide list job)") String jobType,
                @ApiParam(required = false, name = "key", value = "Job key") String key,
                HttpServletRequest request) {
            // Prefer the proxy-supplied address, fall back to the direct peer
            String ip = request.getHeader("X-Real-IP");
            if (StringUtils.isBlank(ip) || "unknown".equalsIgnoreCase(ip)) {
                ip = request.getRemoteAddr();
            }
            logger.info(ip + " claimed " + jobNum + " jobs");
            return crawlerJobService.getJobs(jobNum, jobType, ip, key);
        }

        /**
         * Submit a job.
         */
        @RequestMapping(value = "/submitJob", method = RequestMethod.POST)
        @ApiOperation(value = "submitJob: submit a job", notes = "Submit a job by id")
        public boolean submitJob(
                @ApiParam(required = true, name = "job", value = "Job id") String job,
                HttpServletRequest request) {
            String ip = request.getHeader("X-Real-IP");
            if (StringUtils.isBlank(ip) || "unknown".equalsIgnoreCase(ip)) {
                ip = request.getRemoteAddr();
            }
            // The "job" parameter carries the job as a JSON string
            CrawlerJob cjob = JSON.parseObject(job, CrawlerJob.class);
            logger.info(ip + " submitted a job, id: " + cjob.getId());
            return crawlerJobService.submitJob(cjob);
        }

        /**
         * Create a job.
         */
        @RequestMapping(value = "/createJob", method = RequestMethod.POST)
        @ApiOperation(value = "createJob: create a job", notes = "Create a job from JSON")
        public boolean createJob(
                @ApiParam(required = true, name = "taskJsonStr", value = "Job as a JSON string") String taskJsonStr) {
            return crawlerJobService.createJob(taskJsonStr);
        }

        /**
         * Update a job.
         */
        @RequestMapping(value = "/updateJob", method = RequestMethod.POST)
        @ApiOperation(value = "updateJob: update a job", notes = "requires job")
        public boolean updateJob(
                @ApiParam(required = false, name = "job", value = "JSON data of the job to update") String job,
                HttpServletRequest request) {
            String ip = request.getHeader("X-Real-IP");
            if (StringUtils.isBlank(ip) || "unknown".equalsIgnoreCase(ip)) {
                ip = request.getRemoteAddr();
            }
            logger.info(ip + " updated job: " + job);
            return crawlerJobService.updateJob(job);
        }
    }

Step 4: Start the service, then view and test the API

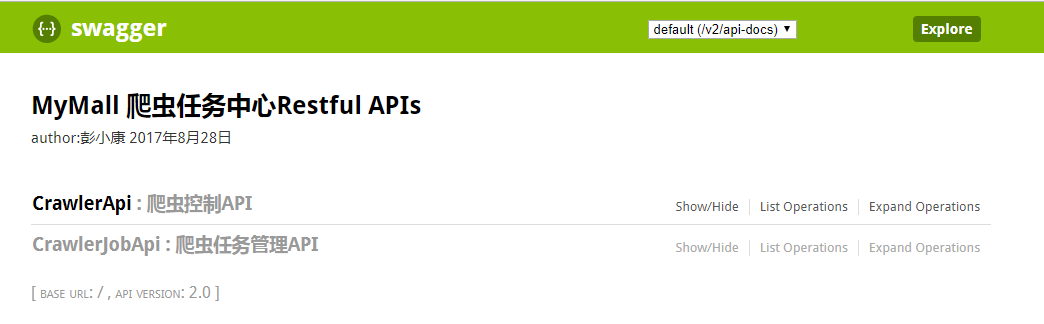

Open the page at the address where your own service is deployed; mine is http://localhost:8082/swagger-ui.html
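The UI page is rendered from a JSON description that springfox-swagger2 serves, by default, at `/v2/api-docs`. If the page stays empty, checking both URLs can narrow down whether the UI or the generated description is missing. Below is a minimal sketch that assumes the base URL above, the default springfox paths, and no servlet context path; `SwaggerProbe` is a made-up name.

```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.URI;

// Hypothetical helper for checking that the Swagger endpoints respond.
final class SwaggerProbe {

    private SwaggerProbe() {
    }

    static String uiUrl(String baseUrl) {
        return stripTrailingSlash(baseUrl) + "/swagger-ui.html";
    }

    // Default location of the generated Swagger 2 JSON in springfox-swagger2
    static String apiDocsUrl(String baseUrl) {
        return stripTrailingSlash(baseUrl) + "/v2/api-docs";
    }

    private static String stripTrailingSlash(String s) {
        return s.endsWith("/") ? s.substring(0, s.length() - 1) : s;
    }

    /** Returns the HTTP status code, or -1 if the service cannot be reached. */
    static int status(String url) {
        try {
            HttpURLConnection conn =
                    (HttpURLConnection) URI.create(url).toURL().openConnection();
            conn.setRequestMethod("GET");
            conn.setConnectTimeout(2000);
            conn.setReadTimeout(2000);
            try {
                return conn.getResponseCode();
            } finally {
                conn.disconnect();
            }
        } catch (IOException e) {
            return -1;
        }
    }

    public static void main(String[] args) {
        String base = args.length > 0 ? args[0] : "http://localhost:8082";
        System.out.println(uiUrl(base) + " -> " + status(uiUrl(base)));
        System.out.println(apiDocsUrl(base) + " -> " + status(apiDocsUrl(base)));
    }
}
```

A status of 200 for both URLs suggests the dependencies and the configuration class were picked up; -1 means the service could not be reached at all.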

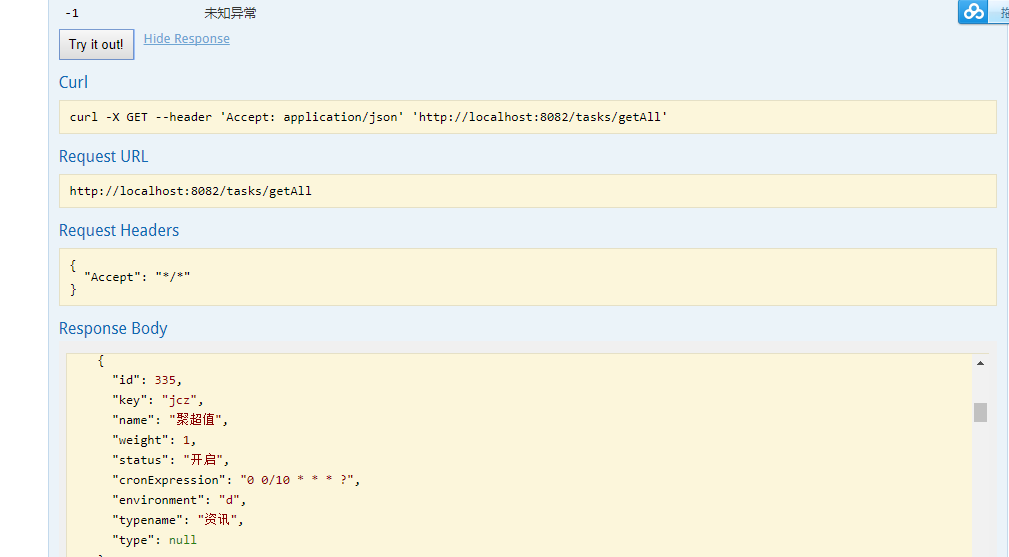

Expand an endpoint to get a test form: fill in the parameters and click the "Try it out!" button to see the result.
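The same request the UI sends for `getJobs` can also be issued from code, which is handy for a quick smoke test. The sketch below assumes the service runs at http://localhost:8082 without a context path, so the endpoint resolves to `/job/getJobs`; `GetJobsClient` is a hypothetical name. Optional parameters that are `null` are left out of the query string, matching `required = false` on the endpoint.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UnsupportedEncodingException;
import java.net.HttpURLConnection;
import java.net.URI;
import java.net.URLEncoder;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical client for the /job/getJobs endpoint.
final class GetJobsClient {

    private GetJobsClient() {
    }

    /** Builds the request URL, skipping parameters that are null. */
    static String buildUrl(String baseUrl, Integer jobNum, String jobType, String key) {
        Map<String, String> params = new LinkedHashMap<>();
        if (jobNum != null) params.put("jobNum", jobNum.toString());
        if (jobType != null) params.put("jobType", jobType);
        if (key != null) params.put("key", key);

        StringJoiner query = new StringJoiner("&");
        for (Map.Entry<String, String> e : params.entrySet()) {
            query.add(encode(e.getKey()) + "=" + encode(e.getValue()));
        }
        String url = baseUrl + "/job/getJobs";
        return params.isEmpty() ? url : url + "?" + query;
    }

    private static String encode(String value) {
        try {
            return URLEncoder.encode(value, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException(e); // UTF-8 is always supported
        }
    }

    /** Sends a GET request and returns the response body as text. */
    static String get(String url) throws IOException {
        HttpURLConnection conn =
                (HttpURLConnection) URI.create(url).toURL().openConnection();
        conn.setRequestProperty("Accept", "application/json");
        conn.setConnectTimeout(2000);
        conn.setReadTimeout(5000);
        try (InputStream in = conn.getInputStream()) {
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] buffer = new byte[4096];
            int n;
            while ((n = in.read(buffer)) != -1) {
                out.write(buffer, 0, n);
            }
            return out.toString("UTF-8");
        } finally {
            conn.disconnect();
        }
    }

    public static void main(String[] args) {
        String url = buildUrl("http://localhost:8082", 5, "platform", null);
        System.out.println("GET " + url);
        try {
            System.out.println(get(url));
        } catch (IOException e) {
            System.out.println("Request failed: " + e.getMessage());
        }
    }
}
```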